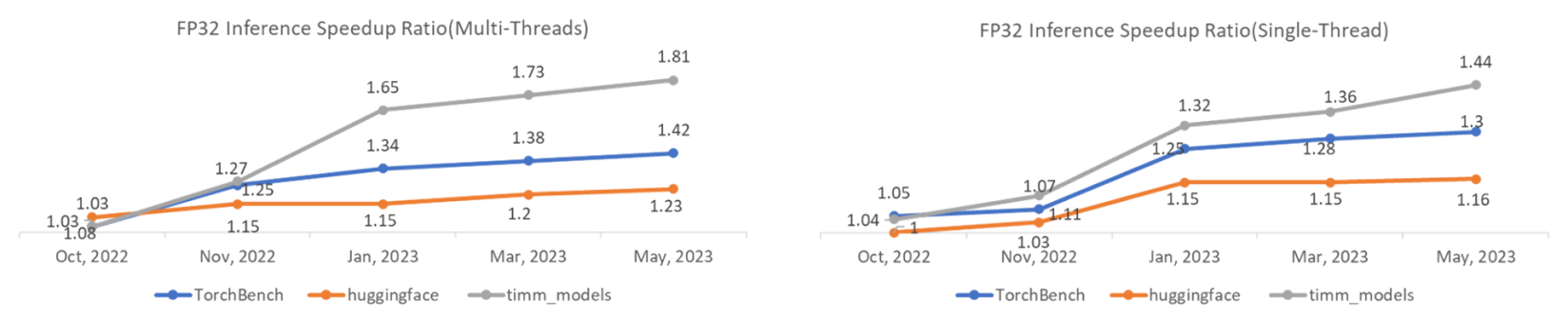

TorchDynamo Update: 1.48x geomean speedup on TorchBench CPU Inference - compiler - PyTorch Dev Discussions

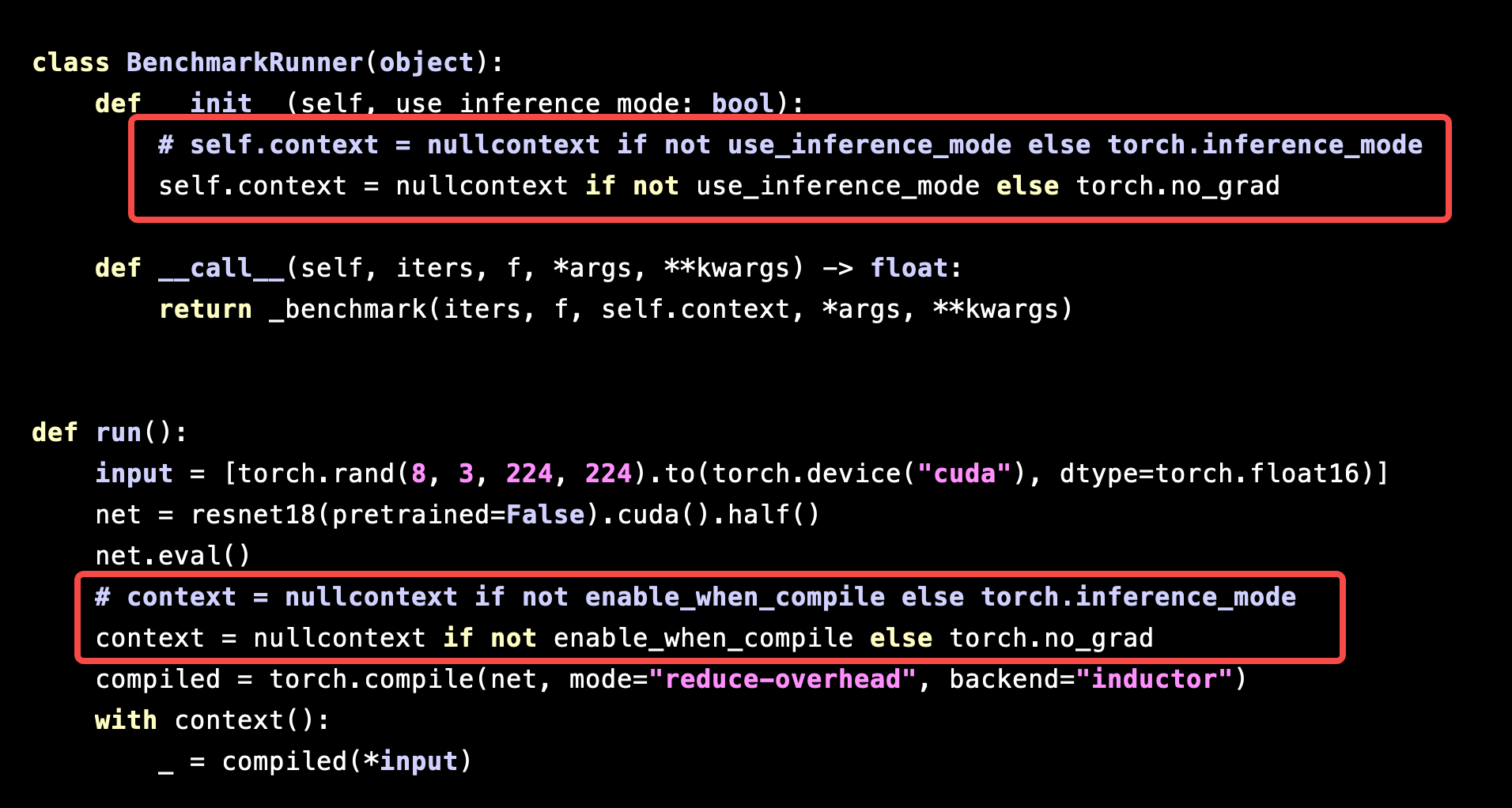

Performance of `torch.compile` is significantly slowed down under `torch.inference_mode` - torch.compile - PyTorch Forums

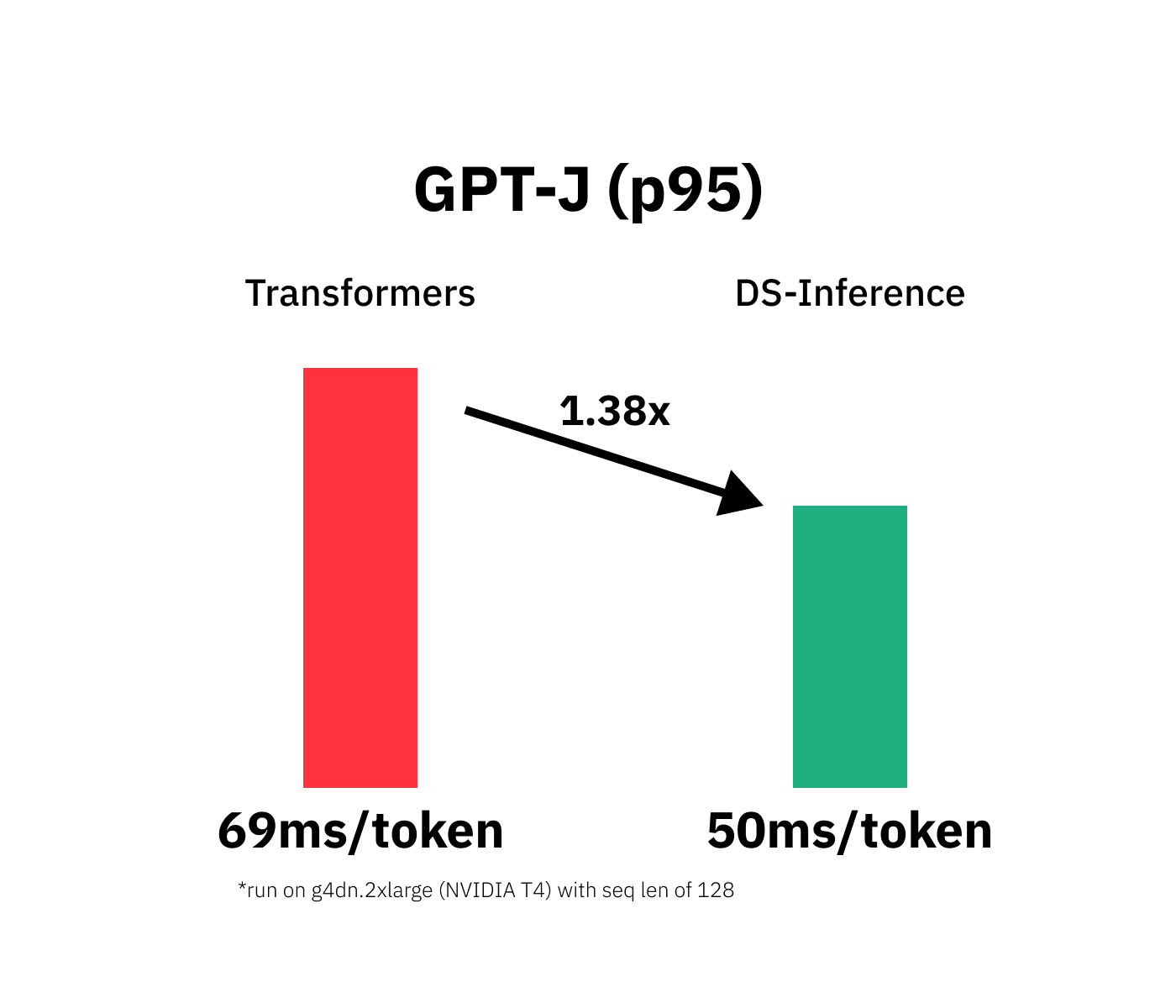

TorchServe: Increasing inference speed while improving efficiency - deployment - PyTorch Dev Discussions

Inference mode complains about inplace at torch.mean call, but I don't use inplace · Issue #70177 · pytorch/pytorch · GitHub

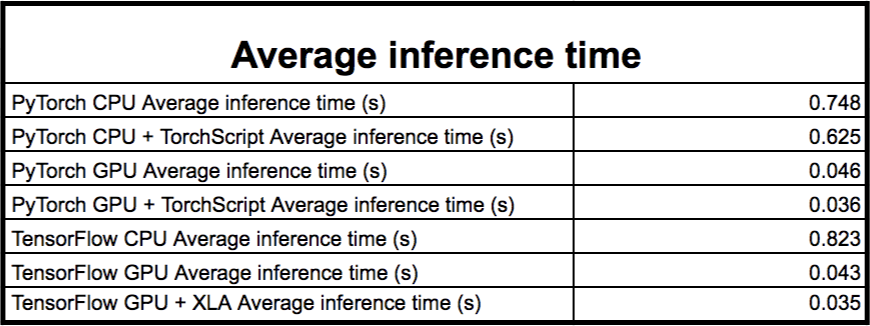

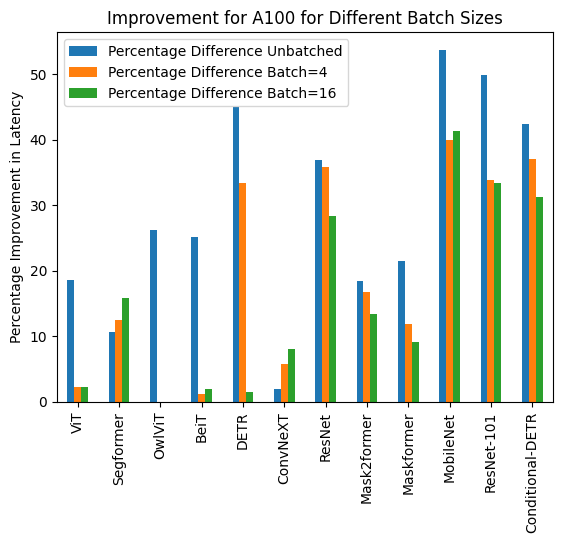

Deployment of Deep Learning models on Genesis Cloud - Deployment techniques for PyTorch models using TensorRT | Genesis Cloud Blog